Page 103 - Cyber Defense eMagazine September 2025


Beyond traditional concerns like code quality and data security, regulators are now assessing intelligent agents for their potential harmful impacts, ethical implications, and societal consequences. For example, regulators have data privacy and intellectual property concerns about how AI/ML models are trained, and they are worried about the dangers those models create, such as discriminatory outcomes (algorithmic bias) or misinformation (AI hallucinations).

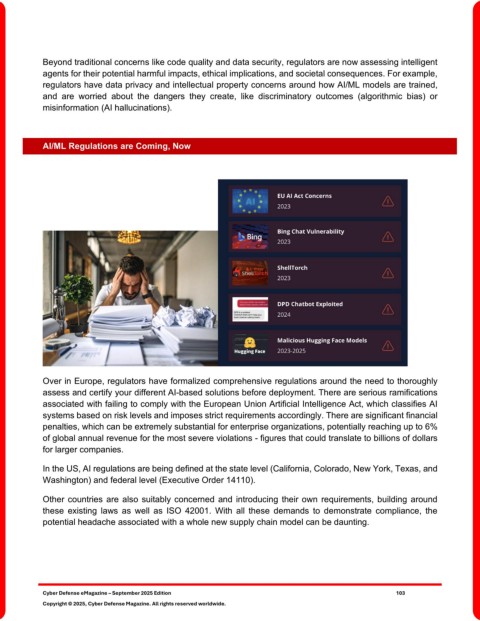

AI/ML Regulations are Coming, Now

In Europe, regulators have formalized comprehensive rules requiring you to thoroughly assess and certify your AI-based solutions before deployment. The European Union Artificial Intelligence Act classifies AI systems by risk level and imposes strict requirements accordingly, and failing to comply carries serious ramifications. Financial penalties can reach up to 7% of global annual revenue for the most severe violations, figures that could translate to billions of dollars for larger companies.

In the US, AI regulations are being defined at the state level (California, Colorado, New York, Texas, and Washington) and federal level (Executive Order 14110).

Other countries are also concerned and are introducing their own requirements, building on these existing laws as well as ISO 42001. With all these demands to demonstrate compliance, the potential headache associated with a whole new supply chain model can be daunting.


Copyright © 2025, Cyber Defense Magazine. All rights reserved worldwide.